Accounting solutions platform Sage announced that its products will come with a new “AI Trust Label” meant to provide customers with clear, accessible information about the way AI functions across its product line.

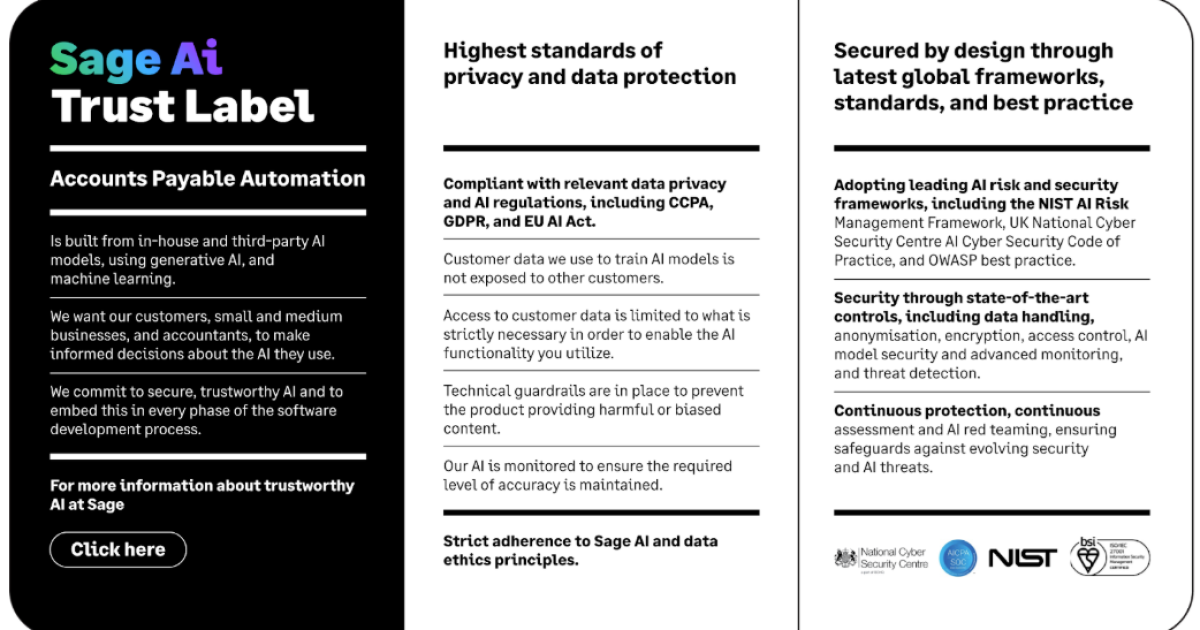

The Sage AI Trust Label is designed as a shorthand symbol that communicates the company’s commitment to safety, ethics, and responsibility in its AI systems, assuring customers that any Sage product featuring this label adheres to specific criteria, frameworks, and safeguards. For instance, it signals that the AI solution complies with global standards such as the NIST AI Risk Management Framework; that ethical principles like fairness, explainability, and security are embedded in its design; and that Sage rigorously upholds data privacy, user consent, and governance protocols.

In this respect, Sage Chief Technology Officer Aaron Harris said it could be seen as both a “quality seal” and an “ingredients” or facts label.

“We’re being transparent with our customers on the facts around AI in each product, from data sourcing to machine [learning] models to how we train the AI. At a glance, the AI Trust Label gives users a clear, unified symbol across all Sage products that are built with responsible ethics in mind. And if they want to dig deeper, the Sage Trust and Security Hub lays out exactly how each product handles customer data, keeps it safe, and stays compliant—so they can use AI with confidence,” he said in an email.

The label itself is designed to be both visible and non-intrusive. Customers will encounter it in key interface areas such as settings, dashboards, help menus, onboarding flows, and during product updates. In some cases, it may appear as a persistent icon—like in the upper-right corner of the interface—while in others, it may surface contextually when users engage with AI features.

“This immediacy is central to Sage’s approach: transparency isn’t buried in documentation. It’s embedded in the experience,” said Harris.

Later this year, Sage will begin rolling out the AI Trust Label across selected AI-powered products in the UK and US. Customers will see the label within the product experience and can find additional details in Sage’s Trust and Security Hub. The label was designed based on direct feedback from SMBs and reflects the signals they said they need to build confidence in using AI tools.

Calls for AI certification system

Sage also called on industry and government players to develop a transparent, certified AI labelling system that encourages wider adoption of the technology. Sage’s own AI Trust Label is designed as both a proof-of-concept and a potential foundation for a broader certification framework with transparency at its core.

Harris said that while things are still in the early stages, Sage is engaging with industry peers and closely monitoring regulatory developments, with the goal of contributing meaningfully, whether through direct collaboration, convening stakeholders, or supporting emerging standards that align with its values. Sage has already initiated conversations with key players and plans to share its own framework as a starting point for broader discussions. Sage, he said, is taking a lead role in advocating for trustworthy AI adoption among SMBs and beyond.

Ideally, according to Harris, such a system would require developers to demonstrate adherence to key principles, including transparency (clear documentation of how AI models function, make decisions, and use data); ethics (compliance with fairness, bias mitigation, and inclusivity standards); security (robust safeguards against data breaches and misuse); and accountability (mechanisms for monitoring, auditing, and addressing risks throughout the AI lifecycle). Certification could also include independent validation of these practices by third-party auditors or regulatory bodies.

Harris said Sage envisions a certification system akin to compliance with the NIST AI Risk Management Framework, in which independent third parties inspect and certify AI solutions against established criteria. Alternatively, it could resemble professional licensing systems (e.g., CPA licenses), in which governmental or industry bodies issue certifications after rigorous evaluation. Such a system would ideally combine technical audits, ethical assessments, and ongoing oversight to ensure long-term trustworthiness.

While he conceded that individual developers could, in theory, create their own labels as Sage has done, Harris argued that a unified, industry-wide certification system would better ensure consistency, transparency, and trust across industries.

“When standards aren’t aligned, it creates confusion, especially for small and mid-sized businesses that don’t have the resources to navigate a patchwork of rules. A coordinated effort between industry and government would establish universally recognized benchmarks for ethical AI development, encourage broader adoption of AI by reducing uncertainty around its safety and reliability, and foster collaboration and innovation across industries… In the accounting field, where data sensitivity, regulatory demands, and financial decision-making converge, having a clear AI labelling framework can support automation and insights without compromising trust,” he said.
